Lessons I Have Learned About Enterprise Analytics, Part 1

Introduction

Working with a tool like CRM Analytics often starts with simple use cases: we are getting used to denormalized data, figuring out the relationship between widgets and queries, and scratching the surface of the visualization-layer vs data-layer computation considerations. The principles we learn from these first explorations hold true as our Analytics implementation begins to expand, but at some point every implementation crosses a threshold after which we have to manage not only the details of new source objects and derived fields, but also considerations like the overall performance of our Recipes and Dashboards, where our implementation is sitting relative to governor limits, and who is able to make changes.

To start off our first technical blog series, I’d like to focus on some of the lessons I have learned specifically from working with large-scale Analytics implementations. While I have been working primarily in CRM Analytics, the lessons (if not the terminology) apply in Tableau, PowerBI, or the analytics engine of your choice. My hope is that my fellow builders can apply these lessons early in their builds, so their environments are ready to scale as the requests come in.

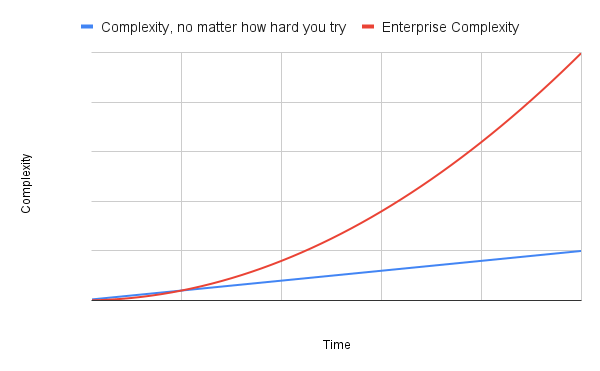

Complexity Only Moves One Direction

In the first entry in this series, I want to focus on a lesson that may seem obvious at first, but understanding it on a practical level as early as possible can save untold hours, stress, and confusion.

Our clients’ businesses are growing and changing all the time. They are adding new fields, new objects, and new automations, and they need analytics that can both adapt to and reflect the impact of these changes. My team gets requests for new derived fields almost daily, and part of our job is to know what already exists, and what might make good input to the new requested field. All else being equal, this kind of growth is linear and manageable in the short term.

As organizations scale, even linear growth brings performance issues and governor limit considerations. We need to be able to identify data processing bottlenecks and apply tactics to work through them. Sometimes we will factor out repeated data processing into a staged Dataset. Sometimes we will move a rollup from a Recipe to a formula field in the core org. Sometimes we will optimize our joins by removing unused fields or adding keep/drop transforms to manage new fields being added.

Although we like to save major refactoring projects like API name changes or removing unused fields for quieter times in the business cycle, we have come to include smaller optimizations as part of our ongoing builds. This “always be refactoring” mindset is something we have developed over time, as it is a lot less important early in the build, when validation, robustness, and clarity take precedence over performance. However, in the long term, managing complexity becomes the central architectural consideration of every Analytics implementation.

Day-to-day, there’s one tactic I find most impactful for readying a build for eventual full-scale complexity: Rename everything. Adding a meaningful API name prefix to every join not only identifies off-grain fields, but it can also greatly increase the flexibility of your Dataset. For example, most Salesforce Objects have lookups to the User object that represent the Owner and the Creator of the record. In CRM Analytics, if we were to use the default API name prefixes in brand new join nodes, we would get field names like User.Name and User1.Name. These are not helpful. In contrast, if we update those API name prefixes, we can create much more readable fields like Owner.Name and Creator.Name.We should be creating field names that our teammates, successors, and future selves will still find clear months or years from now.

Conclusion

The challenges we face years into a large Analytics implementation look very different from the challenges we face with a greenfield build. Every consultant and developer should have taken the time to build a rapport with their clients, as conversations related to these issues can be both very technical and very important for everyone involved to understand. Should we fail to face these challenges early on, we can slow progress with repeated or non-obvious changes, we can minimize maintainability by limiting the number of people who understand a solution, and in the worst case, we can introduce technical limitations beyond those imposed by the platform. All of these consequences incur significant costs, so our hope with these lessons is for analysts and developers to adopt them early during implementation to avoid as many of those costs as possible.

In our next entry in this series on Lessons of Enterprise-scale Analytics, we’ll talk about understanding the data model along with how the visualization relates to other aspects of analytics. In the meantime, if you are experiencing challenges with your CRM Analytics solution, we would be happy to assist. Reach out today via our contact page and we’ll follow up for a conversation.